Chaos Testing Azure Arc-enabled SQL Managed Instance's High Availability

Synchronous Transaction Replication and resilient replica recovery is something only a handful of Database Products have built up as a core-feature over the years. Many top rankers in this list do not have an answer to this difficult problem. Even the ones that do have a documented solution, it's pretty hard to compare and contrast how good the implementation actually is from studying docs/watching a demo or conducting a POC, without either:

- Living with them in Production for a while and accumulating lessons learned (or scars, if you asked a DBA); or,

- Observing the system's behavior under intense stress, the worst-imaginable failure conditions possible

Previously, I had only on set up Availability Groups or "AGs" on Windows Server Failover Clusters (AGs are basically SQL Server's answer to resiliency guarantees in case of engine-level failure). Besides running some one-off T-SQL commands to manually failover/failback, there was always a "fear of the unknown" in my mind, regarding what would actually happen, in case the underlying system and it's dependents completely fell over sideways. I also wasn't familiar with how to systematicaly orchestrate drastic failures in Windows, besides restarting a couple VMs or restarting sqlservr.exe from services.msc etc.

So recently, I was tasked with trying my absolute best to break SQL Server Availability Group by any means possible. The definition of broken is:

This time, I had Kubernetes on my side, with the SQL engine running in disposable Linux containers (Azure Arc-enabled SQL Managed Instance, Business-Critical Tier), and the latest-and-greatest Chaos Engineering software from CNCF - called Chaos Mesh - offering many novel Custom Resource Defintions that can be used to declare layers upon layers of controlled, systematic degradations (rather than one-off imperative scripts with a single entry and exit point that restart a VM and move on to cause some other mischief in a linear fashion, etc.).

Having pondered about questions like this about the scary unknowns of hosting data inside ephemeral containers (K8s Pods), I was feeling pretty good about my chances of discovering a failure-mode that triumphed sqlservr.exe(s).

High Availability Architecture and possible faliure points

HA Kubernetes Operator and Pendulum Analogy

Azure Arc-enabled SQL Managed Instance Business Critical tier, besides wrapping all SQL replicas behind an Availability Group Listener, comes with a dedicated Kubernetes Operator called the

arc-ha-orchestrator.An Operator is a dedicated piece of computer program responsible for monitoring the health state of some system it "operates", based on some system specific Finite-state machine definition (basically, business logic).

It then uses the observed signals to figure out the Current-State, and perform some small, pre-defined, intelligent actions to "nudge" (rather than force) the system towards a healthy (i.e. Desired) state. That system could be anything: SQL Server, a pendulum, a self-driving car etc.

- Kubernetes Operators are a very elaborate application of PID Controllers in Electronics that have been around since 1920s. Actually, all of Kubernetes is built on applying this idea as code.

The

arc-ha-orchestrator's sole responsibility is doing everything within its power to monitor and maintain the health of the SQL Server Availability Group it manages. It is the operator's life mission to ensure the AG never gets into an irrecoverable, i.e. corrupt state. If it senses this is about to happen, it will do whatever actions it has within its arsenal, to ensure the State Machine never gets into a corrupt state that cannot be recovered from. The way this is implemented is by applying the Paxos protocol.

This is the best visual analogy I can think of to visualize the arc-ha-orchestrator's responsibility (that is, if SQL Server Availability Group was an inverted pendulum we were trying to keep perfectly balanced while fighting Physics):

So comparing the responsibilities:

| C1 | C2 | C3 |

|---|---|---|

Operator | Responsibility | Enemy |

| Inverted Pendulum | Uses signals from it's various sensors to keep the inverted pendulum perfectly upright, despite abnormal turbulence from the ruler. If the pendulum is slapped really, really hard and knocked over to the point where it keeps spinning - although the motor isn't powerful enough to counteract the torque from the slap, after the spinning eventually stops, the operator (motor), and the system it manages (pendulum), is resilient enough to bring the pendulum back up in a healthy, upright state (assuming no intentional, permanent physical damage to it's motors or hinges). | Physics, and the guy with the ruler knocking things around |

arc-ha-orchestrator | Uses DMV queries, AG sequence_number, Pod health and other metadata as heartbeat from each SQL replica, keeps the AG in a best-effort accessible, and most importantly, recoverable, uncorrupted state. If the SQL Server replicas are slapped really, really hard (no more quorum, 2/3 replicas have their plugs pulled), the Operator has the power to deny connectivity to the AG listener, and once replicas sqlservr.exes come up, brings the replicas back to it's HEALTHY state automatically after the turbulent effects settle down (assuming someone didn't go into the Container and deliberately delete files, or bypass the AG listener and connect to the single instances and start creating/deleting transactions etc.). | Infrastructure failures (also Physics) the guy with the Chaos Mesh CRDs knocking things around |

HA Architecture

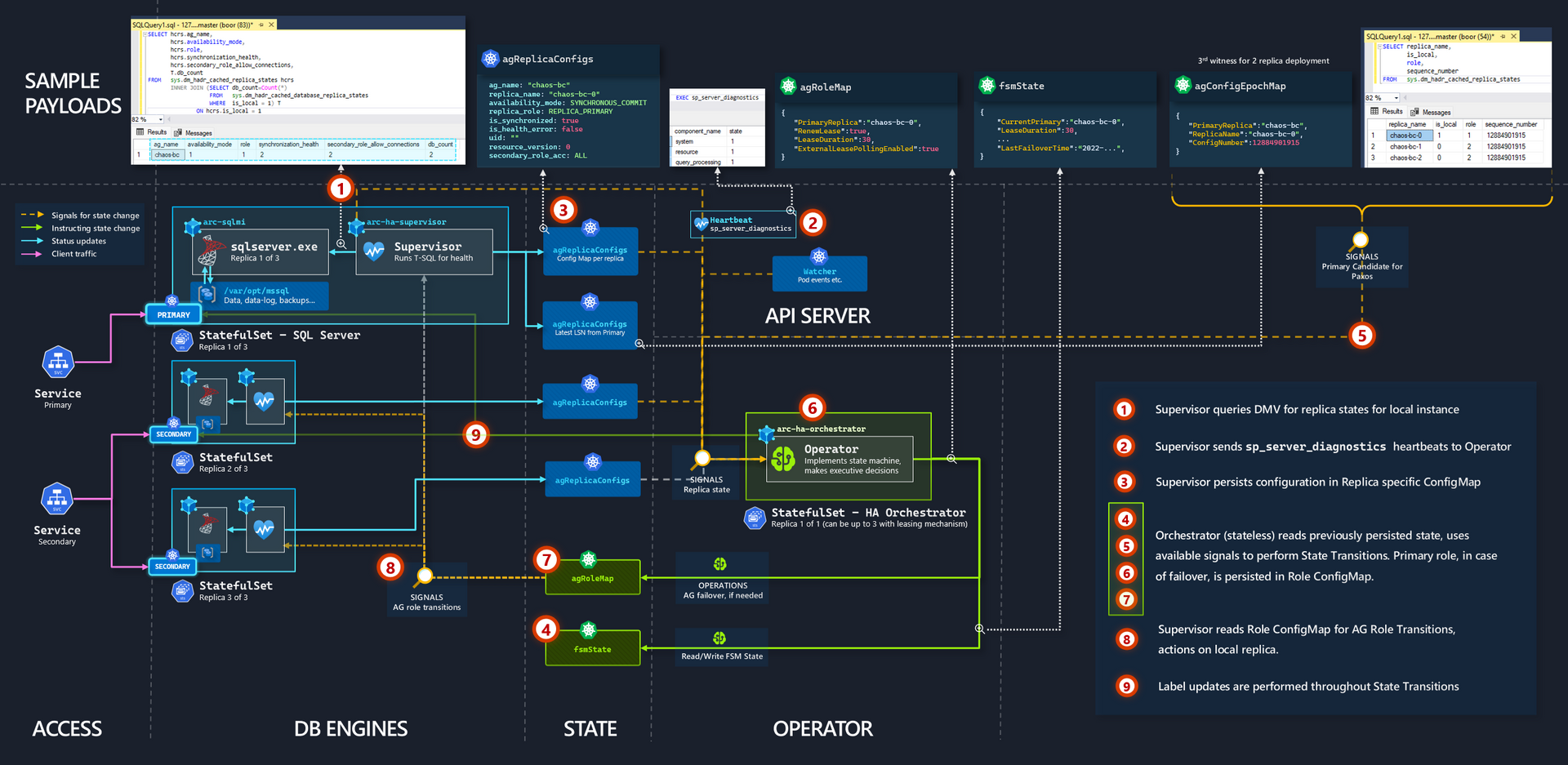

SQL Server Availability Groups is an extensively documented product (both from Microsoft - example, and from the SQL Server community - example). So instead of discussing the well-known mechanics, we focus on the key integration points that serves as the lifeline signals in Azure Arc-enabled SQL Managed Instance's High Availability Automation on Kubernetes, annotated below:

In short:

- Several well known DMVs (e.g.

sys.dm_hadr_database_replica_states) and Stored Procs (e.g.sp_server_diagnostics) are used to determine the health state of the Availability Group and Replica - As

arc-ha-orchestratoris a completely stateless app (we will test the validity of this statement 😉)ConfigMapsare used for storing the various system states, including that of the Finite-state machine. Labelsare used to route traffic between the Kubernetes ExternalServiceto the appropriate Availability Group Replica. In scenarios where replicas are resolving, labels are removed and then reapplied after the Paxos transition is successful.

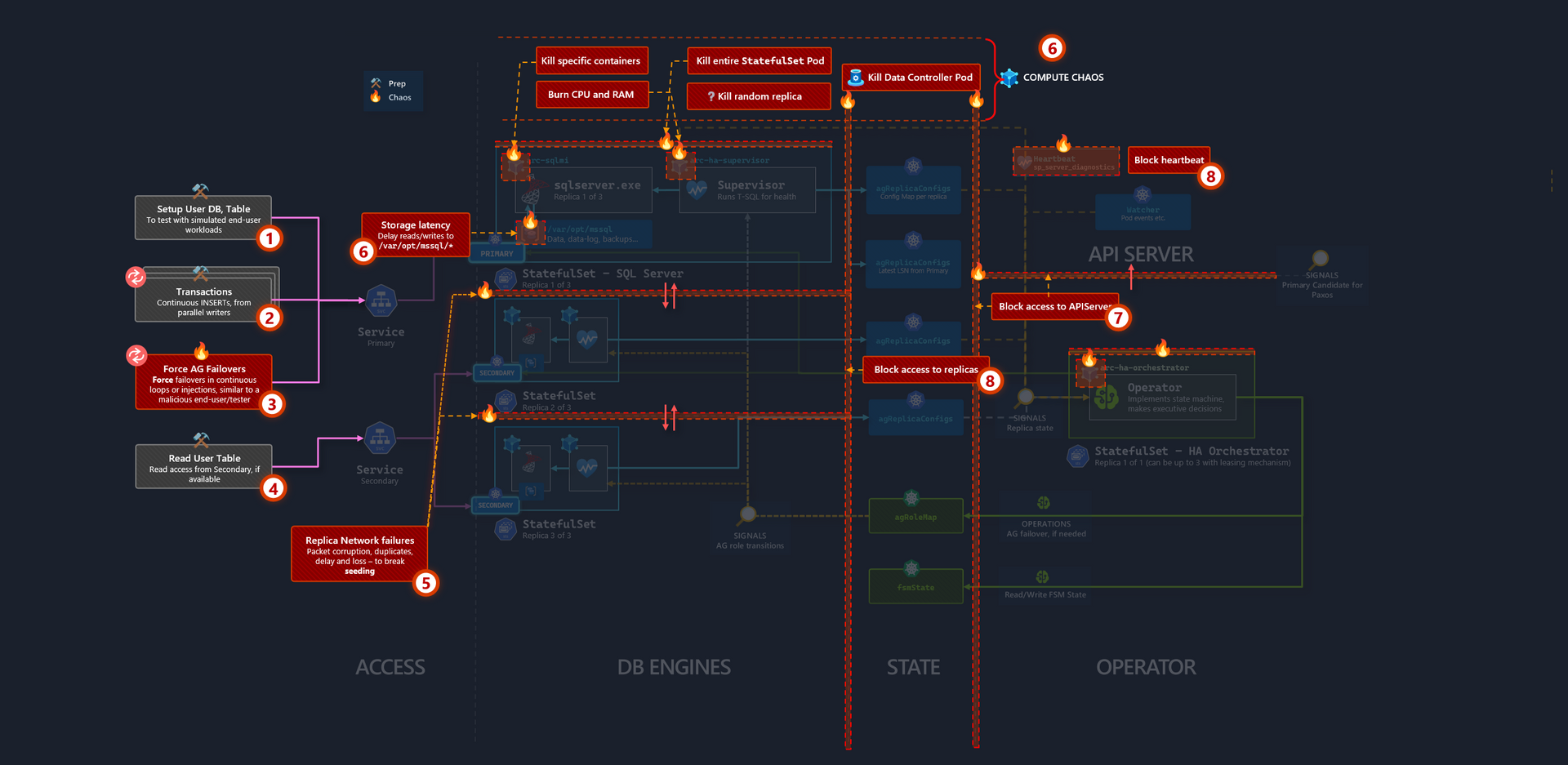

HA Architecture - failure overlay

One important point I learned about System Failure, is that when a key integration point is 100% inaccessible for prolonged durations, it's actually not that stressful/confusing for the system when trying to determine the path to go from Current (Unhealthy) -> Desired (Healthy) State. This is because, given the current signals, there is no traversable paths to being healthy again unless that key integration point is back. Since the system cannot know when that will happen, it stays dormant.

For example, if we completely shut down the Operator's network acces to ConfigMaps (i.e. to Kubernetes API Server), the internal exception handling mechanism dictates that it simply just sits there and refuses to perform State Transitions until it has the necessary Current State data it needs to transition (from the appropriate ConfigMaps). If it doesn't perform transitions, the system remains in the dormant, current state. This is good, because the system remains stable, albeit unhealthy - it's the equivalent to our Pendulum standing still at the bottom of the trajectory.

The same observation applies to sqlservr.exe. As tempting as it may be to completely simulate 100% corrupttion of /var/opt/mssql/data, or inject 20 seconds of latency per R/W operation, SQL simply crashes and refuses to start up in these hazardous conditions.

Therefore, if we want to try and influence the Operator or SQL Server to make faulty decisions, it's important to inject periodic, pointed, partial failures - in some specific sequence, or in parallel.

So looking at the architecture diagram, we can identify hypothetical failure points, to serve as the basis of our Chaos Test:

Knowing the fact that the Operator depends on ConfigMaps to store state, it would have been wicked fun to update the content of the ConfigMap with inaccurate payloads and see how the Operator reacts. But unfortunately, since that violates the rules of engagement (equivalent to breaking the pendulum motor), we make do with the next best strategy - referring to the diagram annotations:

Setup a User Database with a simple table, that gets onboarded into the Availability Group.

A mechanism to sporadically, and/or continuously, rapid insert transactions into the User Database (e.g. multiple write workloads, with infinite loops)

This one is a fun one. Since Azure Arc-enabled SQL Managed Instance supports user initiated manual failovers - i.e.:

ALTER AVAILABILITY GROUP current SET (ROLE = SECONDARY)We should have some compute that can repeatedly demand failovers against the AG listener from replica to replica, all while writes are happening in 2.

Compared to everything else, this isn't really stressful, but some read workload from secondary.

SQL Replica to Replica network corruption, duplicates, increased latency and packet loss. The idea here is to throw off replica seeding.

Inject R/W latency into Storage at

/var/opt/mssql/**.Partially block the Operator's access to APIServer, which impacts the watcher, and ability to read or update state

ConfigMaps.Partially block 2-way access from Replicas to Operator, which also impacts receiving heartbeats.

The idea is, if the system can survive this onslaught of partial failures that forces it into an unhealthy state and recover, it's probably pretty resilient.

Chaos Experiment

Introduction

Chaos Mesh is an Open-Source, CNCF Chaos Engineering Platform built for Kubernetes. It was created by the founders of TiDB, a distributed HTAP Database. This is why it's an amazing fit for SQL Server as well, both being Database Engines, share similar failure points Chaos Mesh is designed to attack.

In short, Chaos mesh is easily installable via helm, and comes with a variety of failure injection CRDs that line up with the failure annotated diagram above.

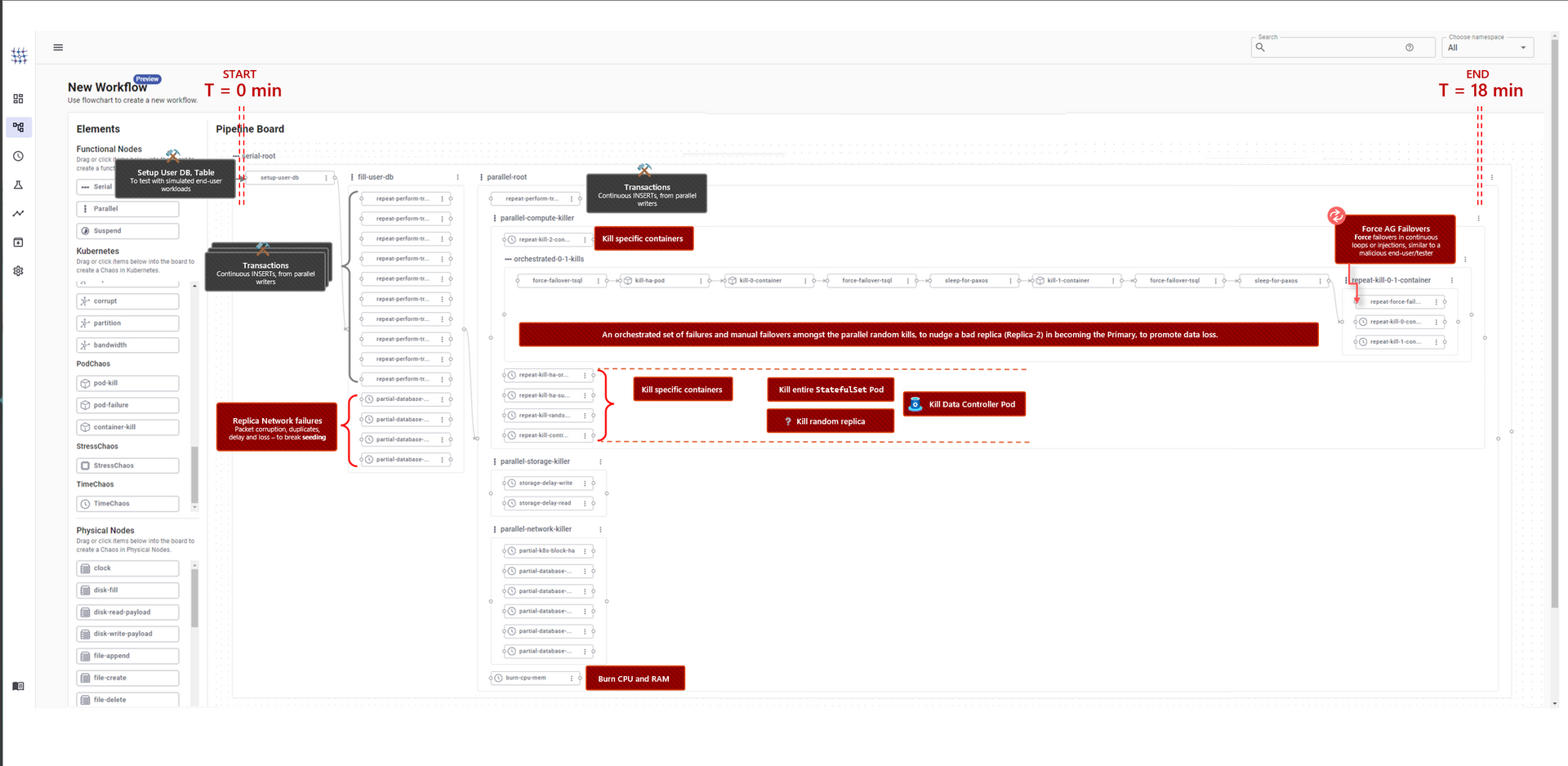

The component that makes Chaos Mesh extra-special is, it's possible to create elaborate, no-code experiments using a workflow-based UI, then export out the Custom Resources as a YAML manifest, which means the same experiment can be automated as part of any Continuous Integration pipeline. The concept is identical to Argo Workflows, and was actually inspired by Argo.

Generating the experiment

The failure modes above are constructed in the Chaos Mesh UI:

Running the experiment

- Running the Chaos experiment is a two-step process.

Step 1: Create the SQL instance (~3 minutes):

az sql mi-arc create -n chaos-bc \

--cores-request 1 \

--cores-limit 1 \

--memory-request 2Gi \

--memory-limit 2Gi \

--tier BusinessCritical \

--replicas 3 \

--resource-group ...Step 2: Apply chaos and wait til experiment ends (~18 minutes):

kubectl apply -f sqlinstance-ha.yamlThe manifest targets the chaos-bc instance using label selectors that are part of the manifest.

Demo: Observing the experiment

The end-to-end Chaos experiment execution is demonstrated here:

Summary

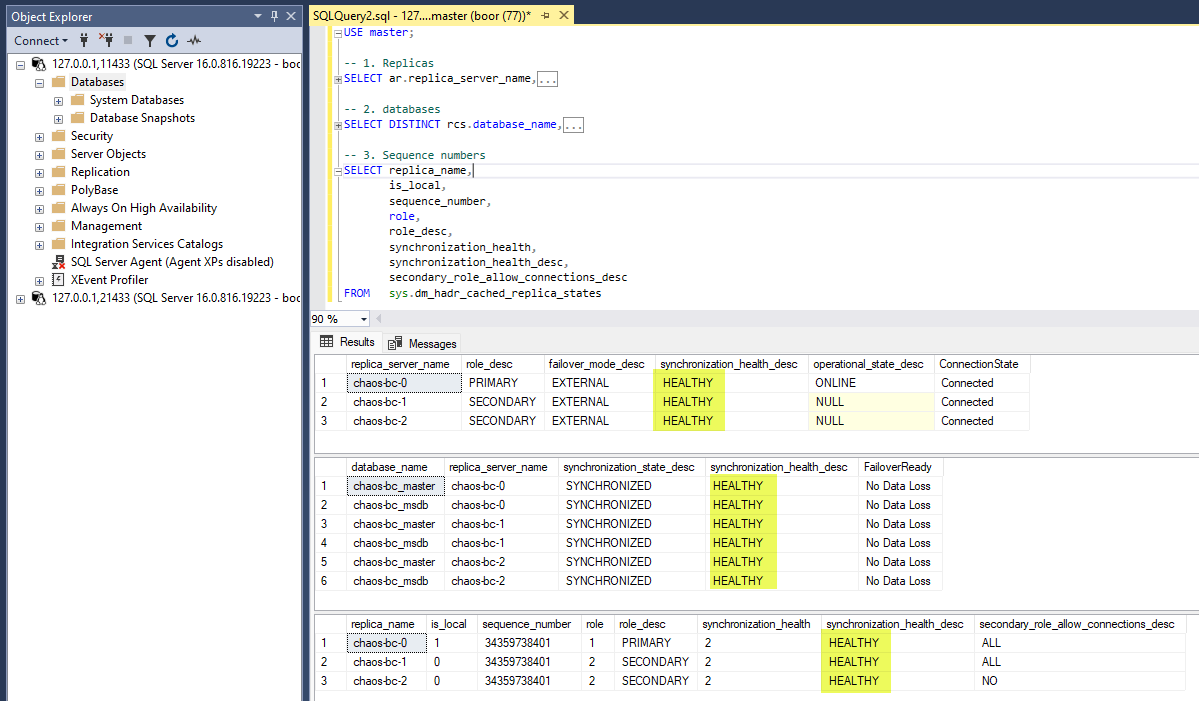

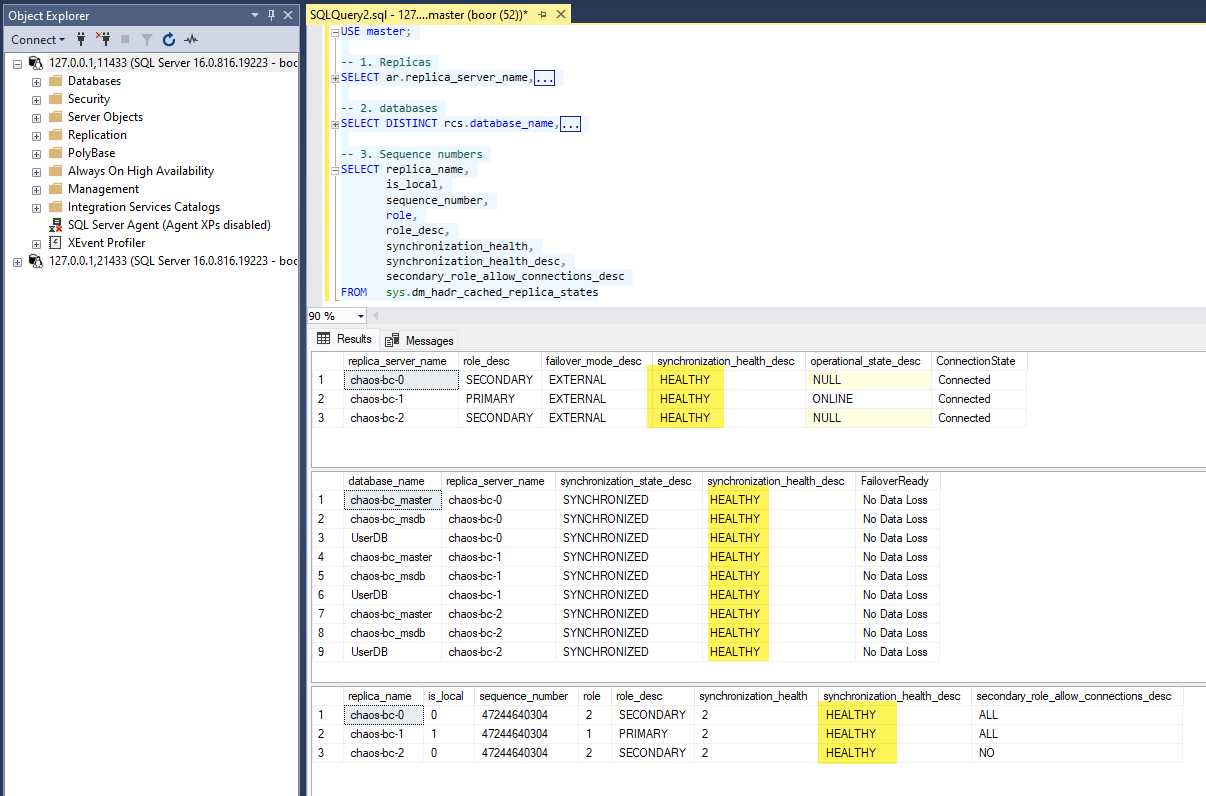

Pre-chaos, all 3 replicas are in a HEALTHY state as expected:

SELECT replica_name,

is_local,

sequence_number,

role,

role_desc,

synchronization_health,

synchronization_health_desc,

secondary_role_allow_connections_desc

FROM sys.dm_hadr_cached_replica_states

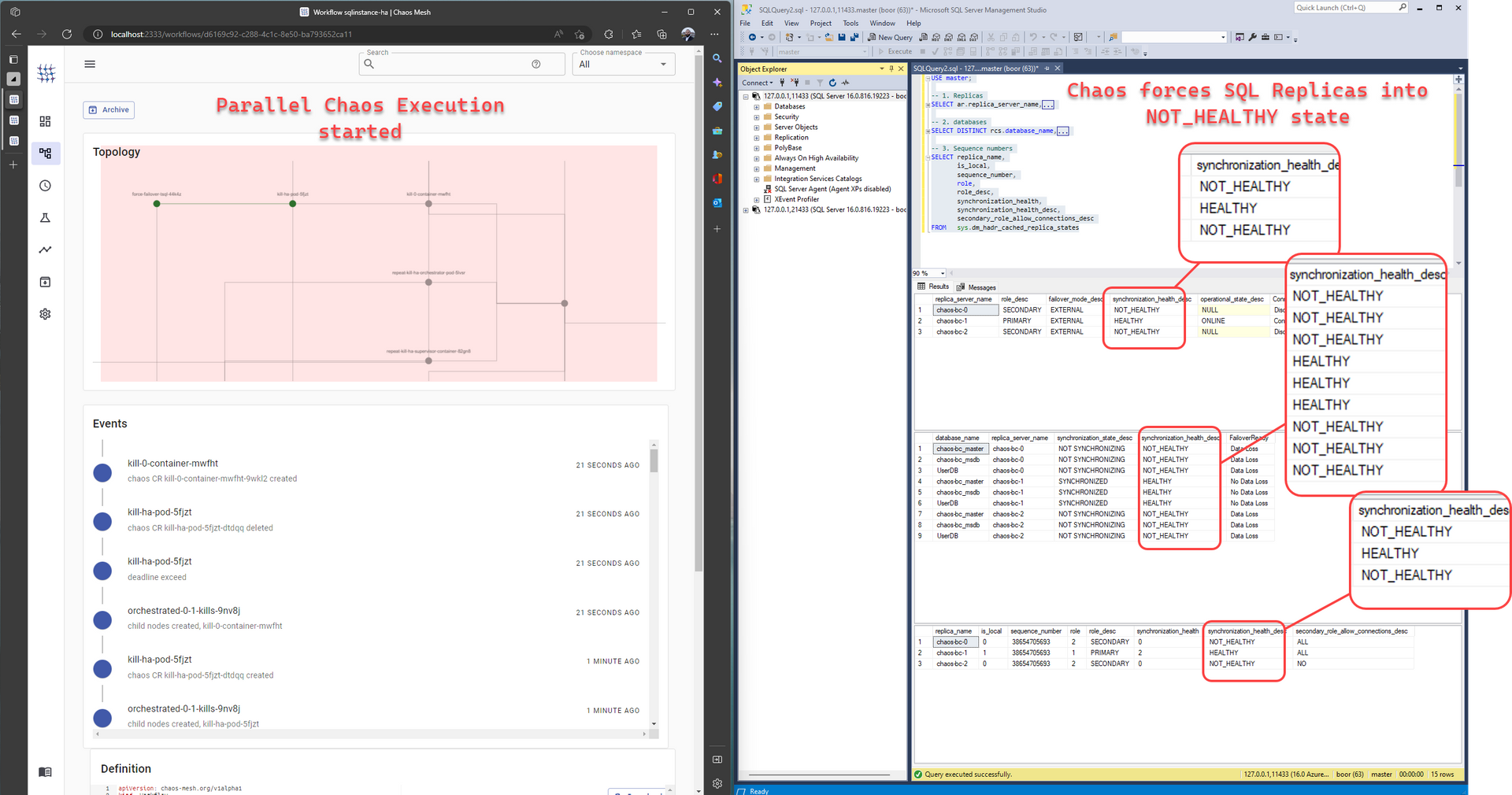

- Around

3:50, Chaos forces 2/3 replicas inNOT_HEALTHYstate:

Essentially, this means that the chaos experiment we've put together is effective at impacting the key system integration points.

The workflow executes for 18 minutes, and is

Completed.After connecting to SQL Server, we notice that despite all of the injected Chaos, the Operator has performed the correct sequence of replica failovers to have all replicas back in a

HEALTHYstate:

And the system is stable once again.

Conclusion

SQL 1, Chaos 0.