Fabric Open Mirroring is really fast, and free (really!)

Open Mirroring is the "easy" button into Fabric.

Ingestion is the hardest part of building a Data Platform:

- Dealing with ingestion lag is painful

- Dealing with compaction is painful

- Tombstoning and schema validation are painful

- Everything needs a lot of infra, money and full time employees

Fabric Open Mirroring is a super clever feature that was built on top of the battle-tested replication engine introduced a few years ago in Synapse Link - which I think has now evolved into Mirrored Databases.

The basic idea is, Open Mirroring gives you a Landing Zone OneLake folder, and you just start blasting Parquet files in there (it also supports CSV/delimited text, but you need to specify schema).

No need to deal with Delta Transaction Protocol pains 🤕, Compaction pains 🤕, or Optimistic concurrency pains 🤕. Literally every language - including JavaScript (if it counts) - can write Parquet, so it's super accessible.

The writer client can specify fancy change data capture semantics using a __rowMarker__ enum column, or, you can do pure APPEND only workloads - which is what I'm interested in - to squeeze out the absolute maximum amount of throughput.
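To make the change data capture idea concrete, here's a minimal sketch of tagging rows before they get written out. The numeric values (0 = insert, 1 = update, 2 = delete, 4 = upsert) and the "marker goes in the last column" convention are my reading of the Open Mirroring landing zone docs, so treat them as assumptions and verify against the current documentation. `RowMarker` and `with_row_marker` are hypothetical helper names, not part of any SDK.

```python
from enum import IntEnum


class RowMarker(IntEnum):
    """Change type carried in the __rowMarker__ column.

    The numeric values are an assumption based on the landing zone docs.
    """
    INSERT = 0
    UPDATE = 1
    DELETE = 2
    UPSERT = 4


def with_row_marker(rows, marker):
    """Return copies of `rows` with __rowMarker__ appended as the last column."""
    return [{**row, "__rowMarker__": int(marker)} for row in rows]


# One logical row going through insert -> update -> delete.
changes = (
    with_row_marker([{"id": 1, "name": "alice"}], RowMarker.INSERT)
    + with_row_marker([{"id": 1, "name": "alicia"}], RowMarker.UPDATE)
    + with_row_marker([{"id": 1, "name": "alicia"}], RowMarker.DELETE)
)

for change in changes:
    print(change)
```

For a pure APPEND only workload, every row is effectively an insert, so the writer has nothing to decide per row.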

Best of all, it's free, the compute is totally free, and the storage is free up to a very generous number - see here.

As someone who deals with ingestion headaches at ginormous scale - see here, my mental model is:

💊 Open Mirroring = Painkiller-As-A-Service

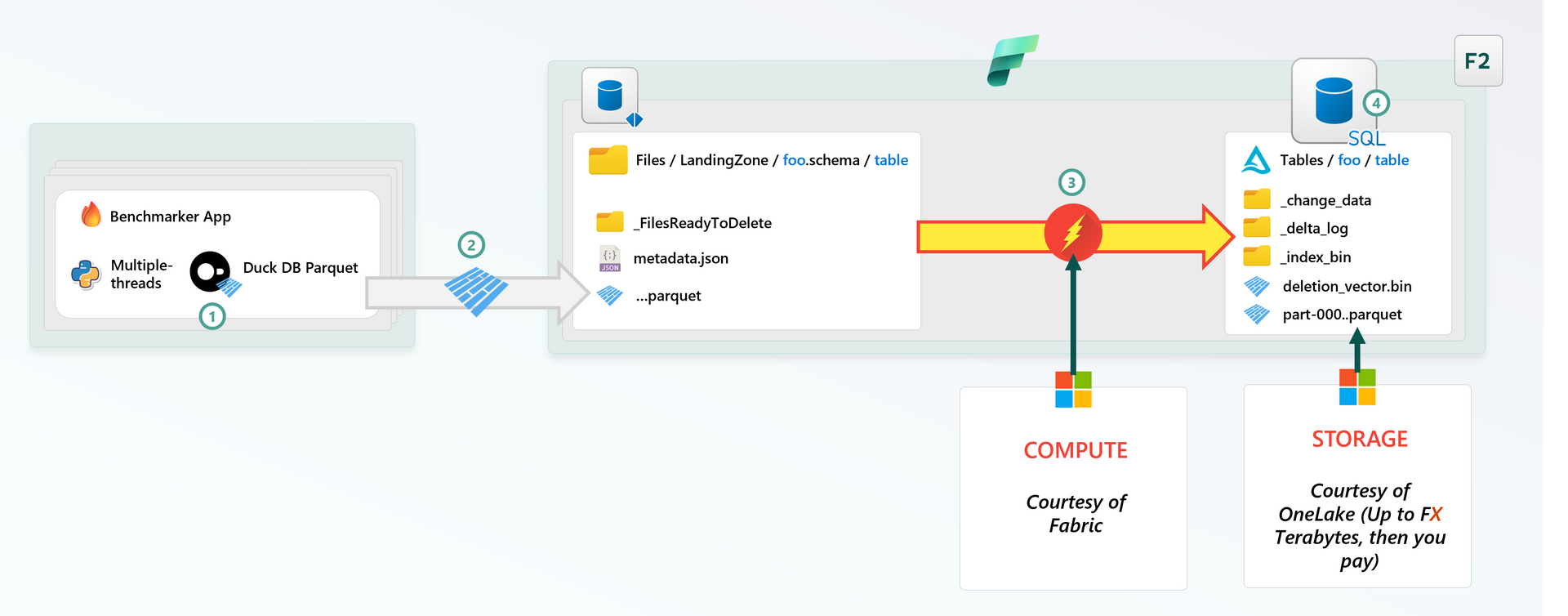

Stress Test Architecture

I wrote a small multi-threaded Python App that uses DuckDB to flush Parquet with various schemas and other tunables into the LandingZone, and then reads the Mirrored Delta tables while diffing with the written files to generate certain Metrics, like Ingestion Lag.

You can run it locally - see code here.

- Multi-threaded App comes up and cooks up Parquet based on the SQL statement

- Each writer thread flushes Parquet to LandingZone concurrently using DuckDB's Parquet Writer for ADLS

- Fabric Open Mirroring "senses" a new file was uploaded (Event Driven, I imagine). Data is compacted into Delta Tables with Deletion Vectors for Merge-On-Read, Delta Change Data Feed, etc.

- Your data is available in Fabric SQL endpoint in near-real time (~30-60 seconds in my runs until I deliberately made the system unstable)
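The writer side of the flow above can be sketched roughly like this. It is not the actual stress test app: it uses only the standard library, writes CSV into a local temp folder standing in for the Landing Zone (the real app flushes Parquet to OneLake via DuckDB), and assumes the landing zone wants zero-padded, monotonically increasing 20-digit file names, which is my understanding of the naming convention. `next_file_name`, `flush_batch` and `run_writers` are hypothetical names.

```python
import csv
import itertools
import tempfile
import threading
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path

# Shared counter so concurrent writers never reuse a file number.
_sequence = itertools.count(1)
_sequence_lock = threading.Lock()


def next_file_name(extension="csv"):
    """Hand out the next zero-padded, 20-digit file name (assumed convention)."""
    with _sequence_lock:
        number = next(_sequence)
    return f"{number:020d}.{extension}"


def flush_batch(landing_zone, rows):
    """Write one batch of rows as a single file in the landing zone folder."""
    path = landing_zone / next_file_name()
    with path.open("w", newline="") as handle:
        writer = csv.writer(handle)
        writer.writerow(["id", "written_at"])
        writer.writerows(rows)
    return path


def run_writers(landing_zone, writers=4, files=8, rows_per_file=1000):
    """Flush `files` batches concurrently using `writers` threads."""
    batches = [
        [(file_no * rows_per_file + i, "2025-01-01T00:00:00Z")
         for i in range(rows_per_file)]
        for file_no in range(files)
    ]
    with ThreadPoolExecutor(max_workers=writers) as pool:
        return list(pool.map(lambda batch: flush_batch(landing_zone, batch), batches))


landing_zone = Path(tempfile.mkdtemp())  # local stand-in for the OneLake folder
written = run_writers(landing_zone)
print(sorted(path.name for path in written))
```

The two knobs that matter for the experiments are `writers` (Concurrent Writers) and `rows_per_file` (# Rows/File); everything else is scaffolding.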

I went up to 1.2 Billion Rows/Minute on an F2 SKU, with 5 identical 32-core Windows Devboxes blasting Parquet simultaneously. The CPU was 100% hot on all machines. It was amazing.
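To put that number in perspective, here's a quick back-of-the-envelope breakdown. The rows/minute figure is the best run reported in the Results section further down; the per-machine and per-core splits assume all 5 machines and all 32 cores contributed evenly, which is an assumption on my part rather than something measured.

```python
ROWS_PER_MINUTE = 1_167_451_155  # best run reported in the post
MACHINES = 5                     # identical Devboxes, from the post
CORES_PER_MACHINE = 32           # from the post

rows_per_second = ROWS_PER_MINUTE / 60
rows_per_minute_per_machine = ROWS_PER_MINUTE / MACHINES
rows_per_minute_per_core = ROWS_PER_MINUTE / (MACHINES * CORES_PER_MACHINE)

print(f"{rows_per_second:,.0f} rows/second overall")                  # 19,457,519
print(f"{rows_per_minute_per_machine:,.0f} rows/minute per machine")  # 233,490,231
print(f"{rows_per_minute_per_core:,.0f} rows/minute per core")        # 7,296,570
```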

Here's a short demo:

Data Collection Methodology

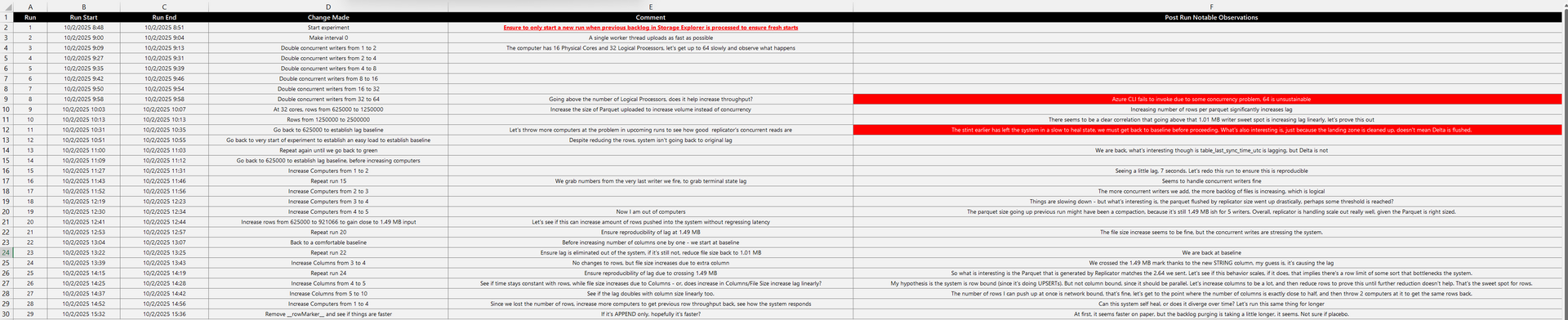

Similar to a physics experiment, I made one small change to the inputs at a time and observed how the system behaved. For each run, I recorded:

A. Run

B. Run Start

C. Run End

D. Change Made

E. Comment/Thought Process

F. Post Run Notable Observations - RED implies I broke something with my input (AKA Significant Lag and Backlog) and had to let the system self-recover before moving onto the next run

The Excel file above and a small Power BI report are available in results here.

Results

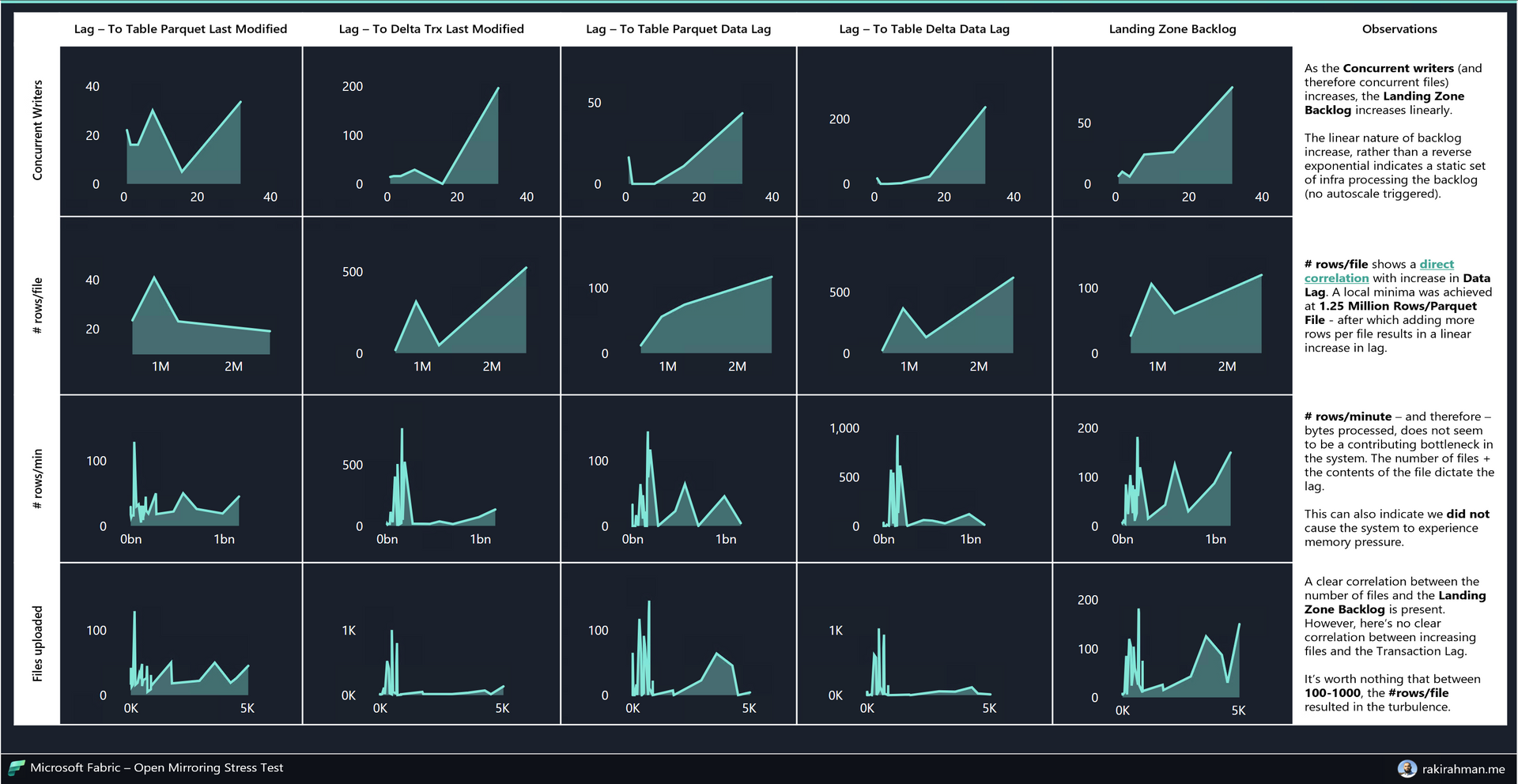

Explanation of the Input-Output variables

| Input | Output | Comment |
|---|---|---|
| Concurrent Writers | - | Number of concurrent DuckDB writer threads uploading Parquet files |
| # Rows/File | - | Number of rows per Parquet file |
| # Rows/Minute | - | Number of rows uploaded per minute, across all machines |
| Files Uploaded | - | Number of files uploaded across an experiment run |
| - | Lag - To Table Parquet Last Modified | Scans the Delta Table's Parquet directory to find the last modified parquet file, the idea is, this number tells us if the replication system is "doing stuff" |
| - | Lag - To Delta Trx Last Modified | Scans the Delta Transaction log to find the last committed transaction |
| - | Lag - To Table Parquet Data Lag | Takes the most recently modified parquet file and reads it to diff the writer timestamp vs the max committed time data column, the idea is, this shows us what lag is in the latest Parquet file, that may or may not have been committed in the Delta Transaction |
| - | Lag - To Table Delta Data Lag | Reads the Delta table's max committed time data column, this is what a regular Delta Lake Client will read after the commit is flushed |
| - | Landing Zone Backlog | At the end of a writer run, how many backlogged files are present in LandingZone that need to be processed by the mirroring engine |
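The lag metrics in the table boil down to "find a timestamp, subtract it from a reference time". Here's a minimal local sketch of the two flavors, using a temp folder as a stand-in for the Delta table's Parquet directory. The real app reads OneLake and actual Parquet/Delta data; `newest_parquet` and `lag_seconds` are hypothetical helper names, and the timestamps are made up for illustration.

```python
import os
import tempfile
from datetime import datetime, timedelta, timezone
from pathlib import Path


def newest_parquet(directory):
    """Return (path, last-modified time) of the newest .parquet file, or None."""
    candidates = [p for p in Path(directory).rglob("*.parquet") if p.is_file()]
    if not candidates:
        return None
    newest = max(candidates, key=lambda p: p.stat().st_mtime)
    return newest, datetime.fromtimestamp(newest.stat().st_mtime, tz=timezone.utc)


def lag_seconds(reference, observed):
    """Seconds by which `observed` trails `reference` (never negative)."""
    return max(0.0, (reference - observed).total_seconds())


# Fake table directory: two empty files with hand-set modification times.
table_dir = Path(tempfile.mkdtemp())
now = datetime(2025, 1, 1, 12, 0, 0, tzinfo=timezone.utc)
for name, age_seconds in [("part-0.parquet", 90), ("part-1.parquet", 12)]:
    path = table_dir / name
    path.write_bytes(b"")
    stamp = now.timestamp() - age_seconds
    os.utime(path, (stamp, stamp))

# "Last Modified" style lag: how stale is the newest file on disk?
newest_path, modified = newest_parquet(table_dir)
last_modified_lag = lag_seconds(now, modified)

# "Data Lag" style lag: latest writer timestamp vs. the max timestamp
# readable from the table (illustrative values only).
latest_writer_timestamp = now
max_committed_timestamp = now - timedelta(seconds=30)
data_lag = lag_seconds(latest_writer_timestamp, max_committed_timestamp)

print(newest_path.name, last_modified_lag, data_lag)
```

The "Last Modified" lags only tell you the replicator is alive; the "Data Lag" ones tell you how fresh the rows a reader can actually see are.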

Observations:

- `1,167,451,155 rows/minute` with `5068` Parquet files uploaded in run 21 was the best run, the `Lag - To Table Delta Data Lag` was 11.3 seconds, which is amazing - it tells me that barring any preview bugs, this thing can scale, hard.

- Increasing `# Rows/File` generally improves throughput, until I got to `1,250,000` rows in run 9 - at this point, the latency took a huge hit. I'm not sure why, yet.

- The replicator generally replicates Parquet Files with the same size as what the writer client sent (~1.49 MB was what I was sending). In other words, it currently doesn't act like Spark where it tries to buffer until 128 MB is reached, my guess is, it's optimized for latency with an upper-limit flush timeout.

- Just because the `LandingZone` is purged doesn't mean the Delta Transaction will be committed in sync, the 3 things reading, cleaning, and writing are async.

- There is definitely a sweet spot to the `Size/encoding/compression of parquet file + # rows + # columns = max throughput` that will make Open Mirroring rip the hardest. I was not able to find this spot empirically this time around (ran out of time during benchmarking, and also, 1.2 Billion Rows/Minute is not too shabby in my books).

Verdict

Even though Mirroring was originally introduced to get data from OLTP systems into OLAP Delta Tables, I think the overall replication feature is a fantastic way to ingest APPEND only workloads too without managing a bunch of expensive Streaming Processing infrastructure that eats up your CU or Azure Credits, similar to the setup I discussed here.

In fact - if I could have my way, I would brand Open Mirroring as the de facto ingestion contract to get data into Fabric.

You see, it's pretty hard to beat free 😜