Column level lineage in Fabric Spark with OpenLineage and stashing the lineage in Delta Lake

Why?

When the amount of ETL and tables in your Data Platform reaches a non-trivial volume, you'll realize you'll need a lineage solution to:

- Onboard any new engineer to contribute meaningfully

- Let end-users make sense of the data

"Go read the ETL code" is not a viable strategy. AI-based string search doesn't give you a "top-down" view of the system either, not when you have 1000s of jobs and queries.

There must be a better solution.

Who?

Many vendors realized this early on:

dbt's most lucrative feature IMO is the built-in DAG-based lineage. It's quite rudimentary (because it's not column level), but super easy to set up - you just have to use dbt for all of your ETL.

Databricks' Unity Catalog has a pretty awesome UI for lineage - see this great guided product demo. But the API and lineage have a schema that's specific to Databricks - it feels very similar to Purview to me, albeit a little more modern.

AWS seems to have a pretty great looking OpenLineage compatible API and lineage solution. I don't use AWS though.

Purview has an Atlas based lineage model with Purview specific constructs you need to get your head around.

OpenLineage

After doing research for a few months on the Open-Source data model centric lineage solutions out there (see this great blog: Top 5 Open Source Data Lineage Tools), I've come to the conclusion that OpenLineage is the way to go for the following reasons:

The schema and client SDKs are very well written, much better than Atlas, IMO. It very much reminds me of OpenTelemetry, which I'm a fan of.

Julien Le Dem, the co-creator of Parquet, created OpenLineage. He knows a thing or two about data.

Purview, despite being built on Atlas, went through great heroic efforts to try and become compatible with OpenLineage. That tells me:

My empirical observation is that the OpenLineage effort has fizzled away, and the only documented production reference implementation in this blog is not very elegant: it involves Azure Functions and Azure Table for buffering. Why can't I just use Spark and Delta (AKA this blog)?

I also don't fully understand why you actually need another service like Purview for lineage and why you can't do it natively on Fabric. The UI can be recreated from the OpenLineage data model (see demo) and the search indexing for term-based fast lookup is a solvable problem (e.g. see IndexTable), especially if you want to do offline analysis and can handle a little latency.

Datadog, also a current leader in the Data Observability space, is investing in OpenLineage.

As you'll see in the demo, I found that Fabric Spark actually already ships with OpenLineage installed. So, someone smarter than me has already started thinking ahead.

Since the schema of OpenLineage is open-source, we can pool our knowledge together as humanity and build awesome analysis techniques (queries, SDKs, ML techniques etc.)

The good news is, OpenLineage loves Spark, and you get robust column-level lineage quite easily. This is in contrast to other creative approaches like this in Fabric Warehouse where you use RegEx to parse relationships yourself. Based on my rudimentary understanding of this problem space, this doesn't work for complex SQL for column-level lineage, because you need a robust parser/lexer.
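To make "column-level lineage" a bit more concrete, here's a minimal sketch of pulling source-to-target column edges out of one OpenLineage event. It assumes the event carries the `columnLineage` facet on its outputs, in the shape the OpenLineage spec describes (`fields` → column → `inputFields`). The sample event, table names and the `column_edges` helper are all made up for illustration; they are not taken from the demo.

```python
from typing import Iterator, Tuple

# Hypothetical, hand-written event, trimmed to the fields this sketch reads.
SAMPLE_EVENT = {
    "eventType": "COMPLETE",
    "job": {"namespace": "demo", "name": "daily_orders"},
    "outputs": [
        {
            "namespace": "lakehouse",
            "name": "gold.orders",
            "facets": {
                "columnLineage": {
                    "fields": {
                        "total": {
                            "inputFields": [
                                {"namespace": "lakehouse",
                                 "name": "silver.order_lines",
                                 "field": "price"},
                                {"namespace": "lakehouse",
                                 "name": "silver.order_lines",
                                 "field": "qty"},
                            ]
                        }
                    }
                }
            },
        }
    ],
}


def column_edges(event: dict) -> Iterator[Tuple[str, str]]:
    """Yield (source_column, target_column) pairs from one OpenLineage event."""
    for output in event.get("outputs", []):
        # Events without the facet (e.g. START events) simply yield nothing.
        facet = output.get("facets", {}).get("columnLineage", {})
        for column, detail in facet.get("fields", {}).items():
            target = f"{output['name']}.{column}"
            for source in detail.get("inputFields", []):
                yield f"{source['name']}.{source['field']}", target


for source, target in column_edges(SAMPLE_EVENT):
    print(f"{source} -> {target}")
```

Because the facet already names the input columns, there's no SQL parsing on your side; the Spark integration did that work from the query plan.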

OpenLineage to Delta Lake Ingestion

Spark is perfectly adequate as an engine to do literally anything you can dream of with your data.

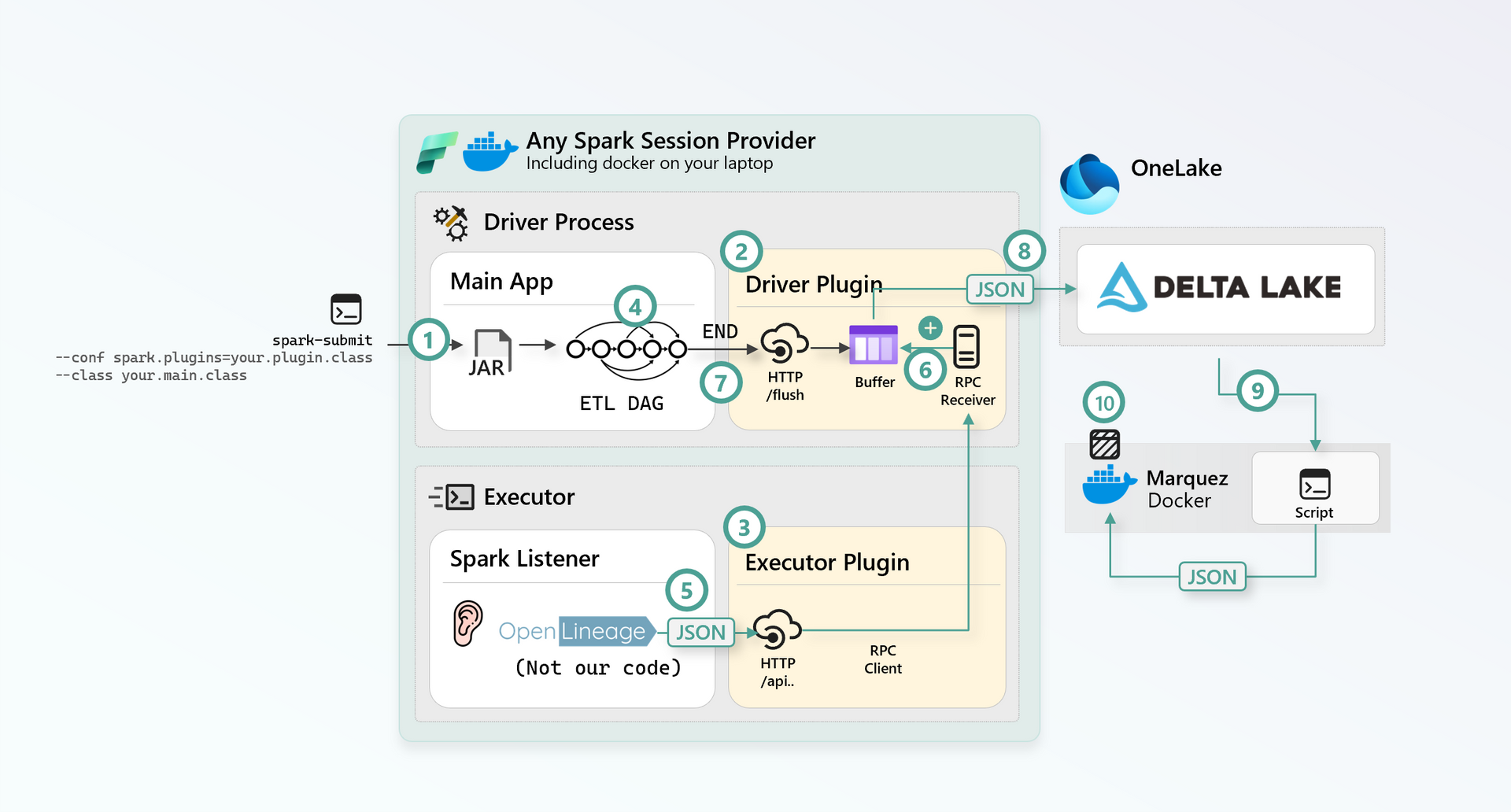

The architecture I came up with so far is as follows:

- You

spark-submitwith configurations for both OpenLineage, and your Spark Plugin - A Spark Plugin for Driver comes up, that hosts a REST API with a

/flushendpoint, and a simple in-memory append-only buffer - An Executor Plugin comes up, that listens on any REST route, and forwards the HTTP call to the Driver via an RPC method,

sendthat can send any serializableObjectacross nodes - Your main app goes off and does some ETL

- The OpenLineage Spark Listener listens for various Spark Engine events, and emits the OpenLineage data model (as JSON), to our REST API per executor node

- Our Executor REST API forwards the entire HTTP payload wholesale via RPC to the Driver, who buffers it

- Your main app finishes ETL and fires a

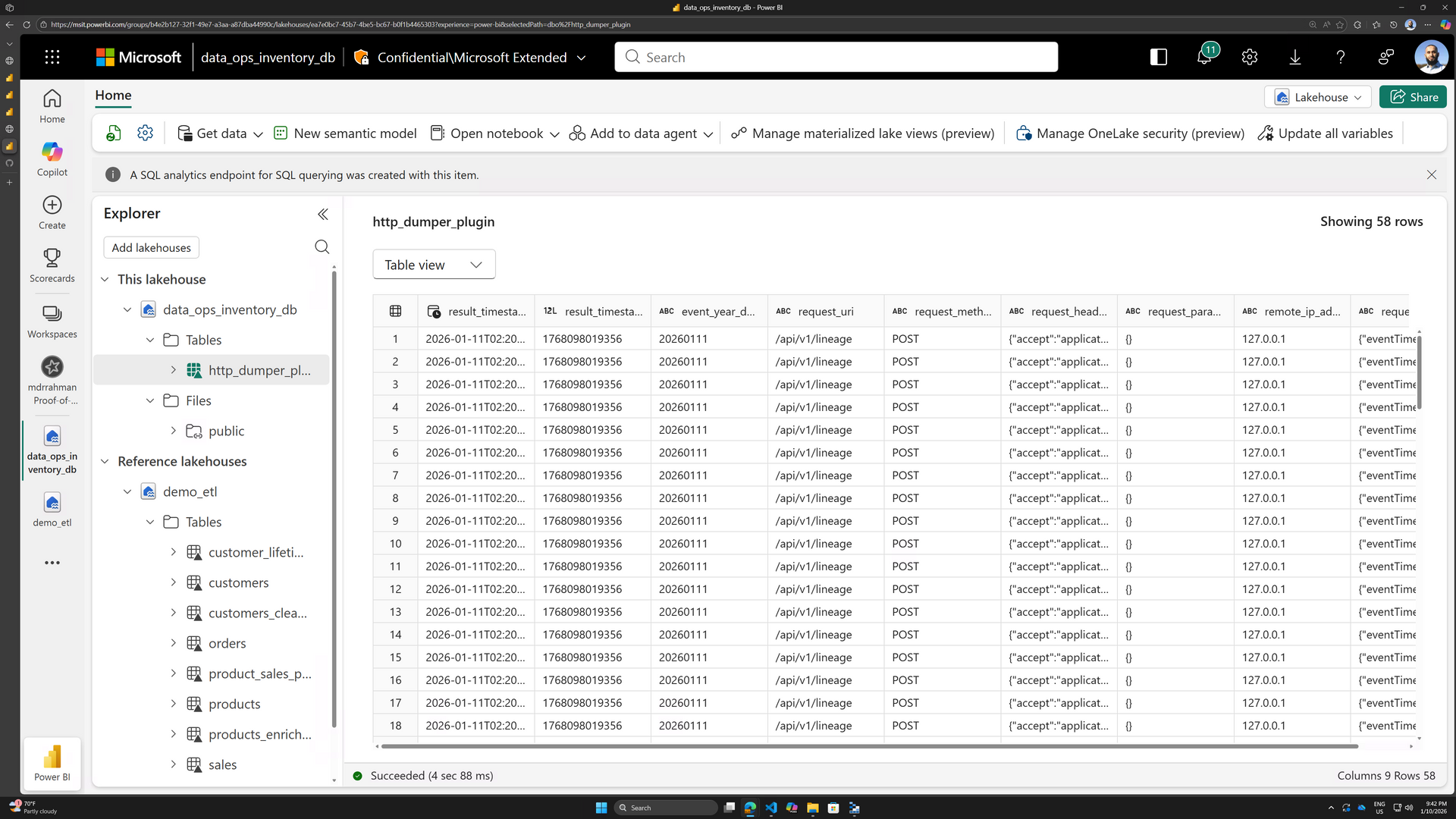

/flushmethod on the Driver - The Driver flushes the buffered JSONs into a Delta Table with the captured HTTP metadata per JSON

- When you're ready to view the lineage, you reconstruct all of those HTTP JSONs from the Delta tables (using SQL queries to filter what timeframe you need)

- You push those into Marquez on Docker Desktop, and there's your column-level lineage
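The buffering part of the steps above can be sketched in a few lines. This is not the plugin from the demo (that one runs inside the Spark Driver); it's a plain Python illustration of the idea: an append-only, thread-safe buffer that keeps each raw payload together with its HTTP metadata, and a flush that hands back everything collected so far. The class name, the row layout and the `received_at` column are my assumptions.

```python
import json
import threading
from datetime import datetime, timezone
from typing import Dict, List


class LineageBuffer:
    """Append-only, thread-safe buffer for raw OpenLineage HTTP payloads."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._rows: List[dict] = []

    def append(self, path: str, headers: Dict[str, str], body: str) -> None:
        """Store one HTTP call as-is; the body is never parsed or modified."""
        row = {
            "received_at": datetime.now(timezone.utc).isoformat(),
            "path": path,
            "headers": json.dumps(headers, sort_keys=True),
            "body": body,
        }
        with self._lock:
            self._rows.append(row)

    def flush(self) -> List[dict]:
        """Return all buffered rows and start over with an empty buffer."""
        with self._lock:
            rows, self._rows = self._rows, []
        return rows


buffer = LineageBuffer()
buffer.append("/api/v1/lineage", {"Content-Type": "application/json"},
              '{"eventType": "START"}')
buffer.append("/api/v1/lineage", {"Content-Type": "application/json"},
              '{"eventType": "COMPLETE"}')

rows = buffer.flush()
print(len(rows), "rows flushed;", len(buffer.flush()), "left in the buffer")
```

In a real job, the rows returned by `flush` are what you'd append to the Delta table. Swapping the list under the lock means events that arrive during a flush land in the next batch instead of getting lost.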

Here's a demo that walks through the details:

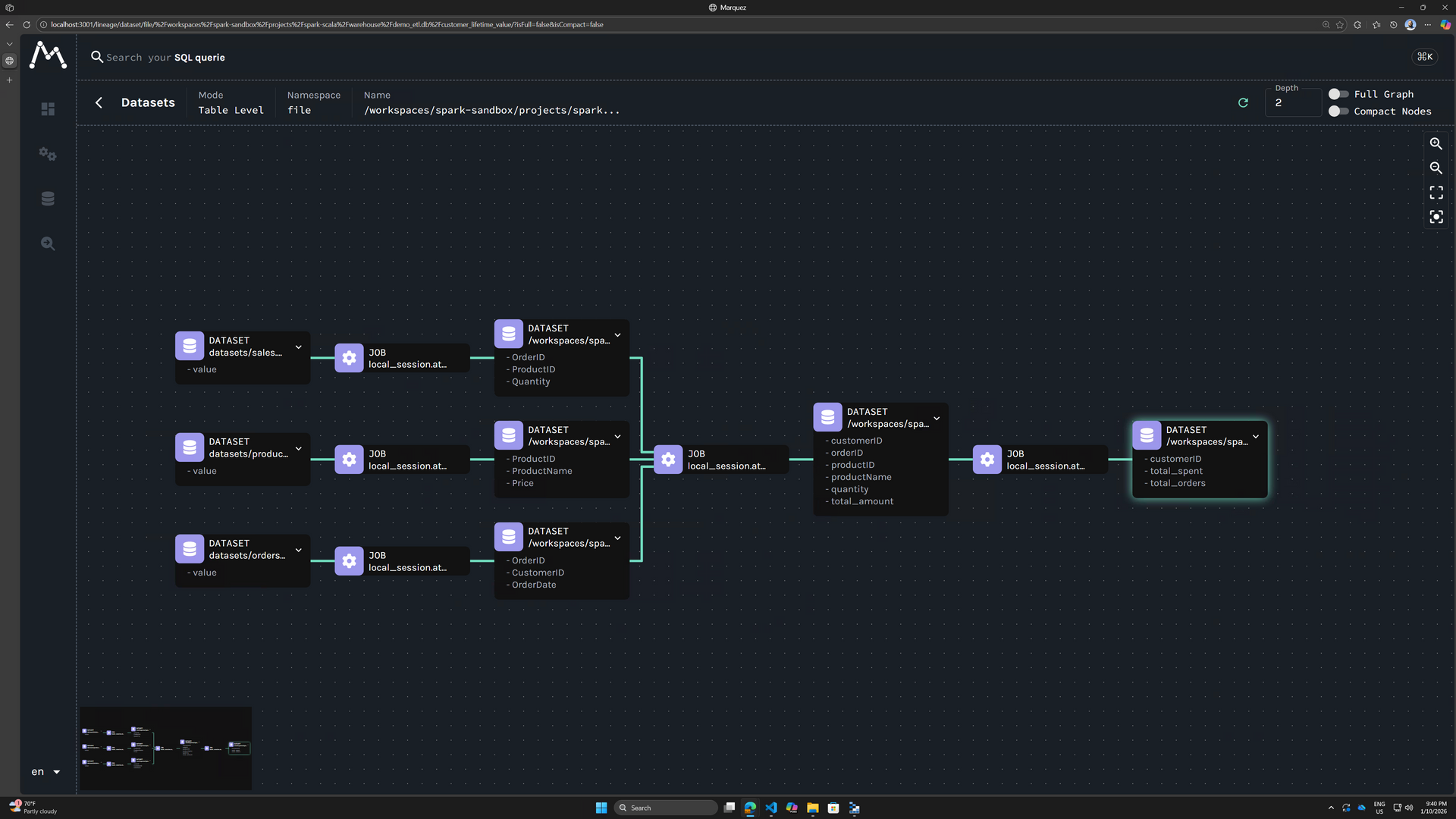

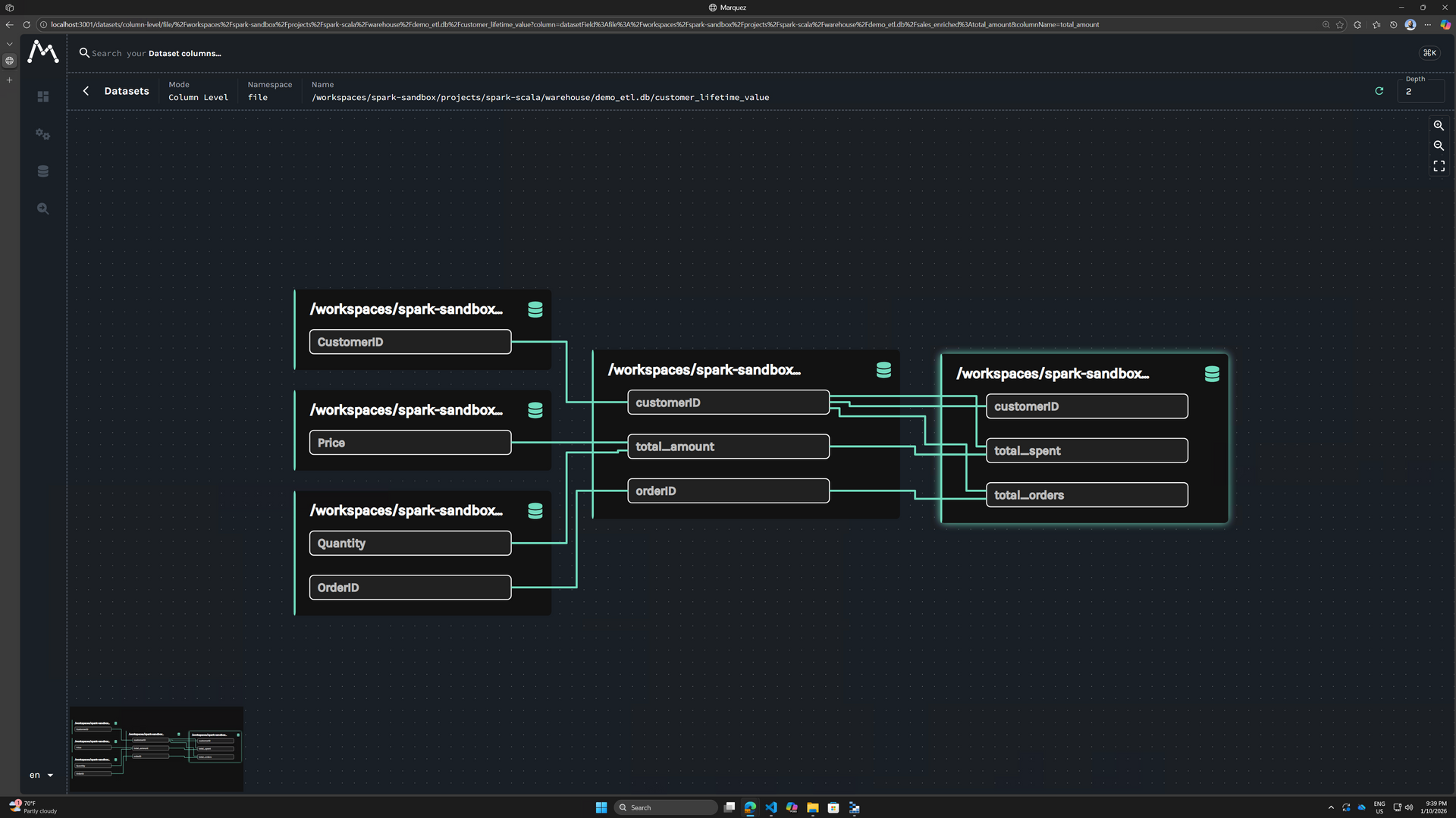

The result:

Repos to reproduce this locally

The video and the repo READMEs below show you how you can reproduce this locally:

This repo has the OpenLineage plugin code demo'd above, this PR has the diffs.

A small repo I created after studying OpenLineage tutorials with simple scripts to run Marquez on Docker locally.

Considerations for production

The REST API in the plugin is simple enough that I don't see it causing problems in production. That being said, every piece of code you have to run and manage is a headache, and it'd be ideal if Fabric could someday sink OpenLineage into Delta tables on our behalf (using a Fabric managed Spark Plugin like this blog, or otherwise).

Pulling out of the Delta tables and hydrating Marquez locally is not ideal for large historical volumes. Technically, I can vibe-code a Marquez drop-in replacement in Fabric using the Extensibility Toolkit, but IMO, Fabric should visualize OpenLineage payloads directly as a first-class feature, similar to Marquez - because this will significantly help every single Customer in making sense of their data estate.
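For a sense of what "hydrating Marquez" involves, here's a rough sketch of the replay step. It assumes the rows have already been read out of Delta as dicts with `received_at` (UTC ISO-8601 strings, all in the same format so they sort lexicographically) and `body` keys, and that Marquez is listening on its default local API endpoint, `http://localhost:5000/api/v1/lineage`. The row layout and the `build_replay_requests` helper are hypothetical; adjust them to whatever your table actually looks like.

```python
import json
import urllib.request
from typing import Iterable, List

# Assumption: Marquez running locally with its default API port.
MARQUEZ_LINEAGE_URL = "http://localhost:5000/api/v1/lineage"


def build_replay_requests(
    rows: Iterable[dict],
    start: str,
    end: str,
    url: str = MARQUEZ_LINEAGE_URL,
) -> List[urllib.request.Request]:
    """Build one POST per stored payload received in [start, end), oldest first."""
    requests = []
    for row in sorted(rows, key=lambda r: r["received_at"]):
        if not (start <= row["received_at"] < end):
            continue
        json.loads(row["body"])  # fail fast on a corrupt payload
        requests.append(
            urllib.request.Request(
                url,
                data=row["body"].encode("utf-8"),
                headers={"Content-Type": "application/json"},
                method="POST",
            )
        )
    return requests


stored_rows = [
    {"received_at": "2025-01-02T10:00:05+00:00", "body": '{"eventType": "COMPLETE"}'},
    {"received_at": "2025-01-02T10:00:00+00:00", "body": '{"eventType": "START"}'},
    {"received_at": "2025-01-03T09:00:00+00:00", "body": '{"eventType": "START"}'},
]

to_send = build_replay_requests(
    stored_rows, "2025-01-02T00:00:00+00:00", "2025-01-03T00:00:00+00:00"
)
for request in to_send:
    print(request.get_method(), request.full_url, request.data.decode("utf-8"))
```

Actually sending each request is a call to `urllib.request.urlopen(request)`. Replaying oldest-first keeps START events ahead of their COMPLETE events, and the time window keeps you from pushing the whole history every time.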

Conclusion

As we saw, the actual changes to each ETL job are almost non-existent: you only need that one plugin file, and you get deep visibility right inside Delta Lake for 1000s of ETL jobs.

It'd be cool if every other engine inside and outside Fabric supported OpenLineage (or any kind of column-level lineage) someday!

But in the meantime, enjoy rich visibility into your Spark code 😊