Querying Event Hub Capture files with Azure Data Explorer

Azure Data Explorer (ADX) has built-in Data Ingestion capabilities from Event Hub - where streaming data is continuously ingested into a Target Table on an ADX database. We can also take advantage of external tables on ADX to query data on Azure Data Lake - in particular, the avro files from Event Hub Capture.

We looked at how to process Capture files with Auto Loader earlier here. This article presents an alternative approach that allows us to query the same set of avro files in place using KQL instead - with the added benefit of not having to specify the payload schema or move data from the data lake.

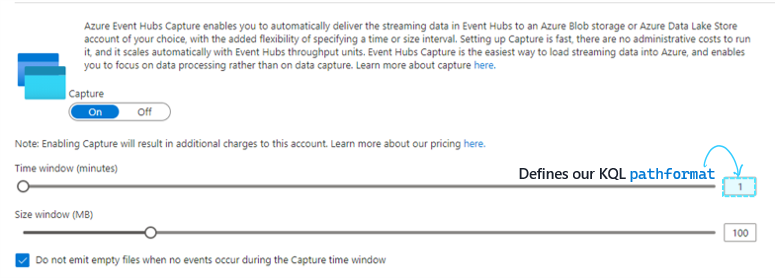

Before we structure our KQL query to define the external table, we make a note of the Time window (minutes) of the Event Hub Capture Frequency - which in our case is every 1 minute:

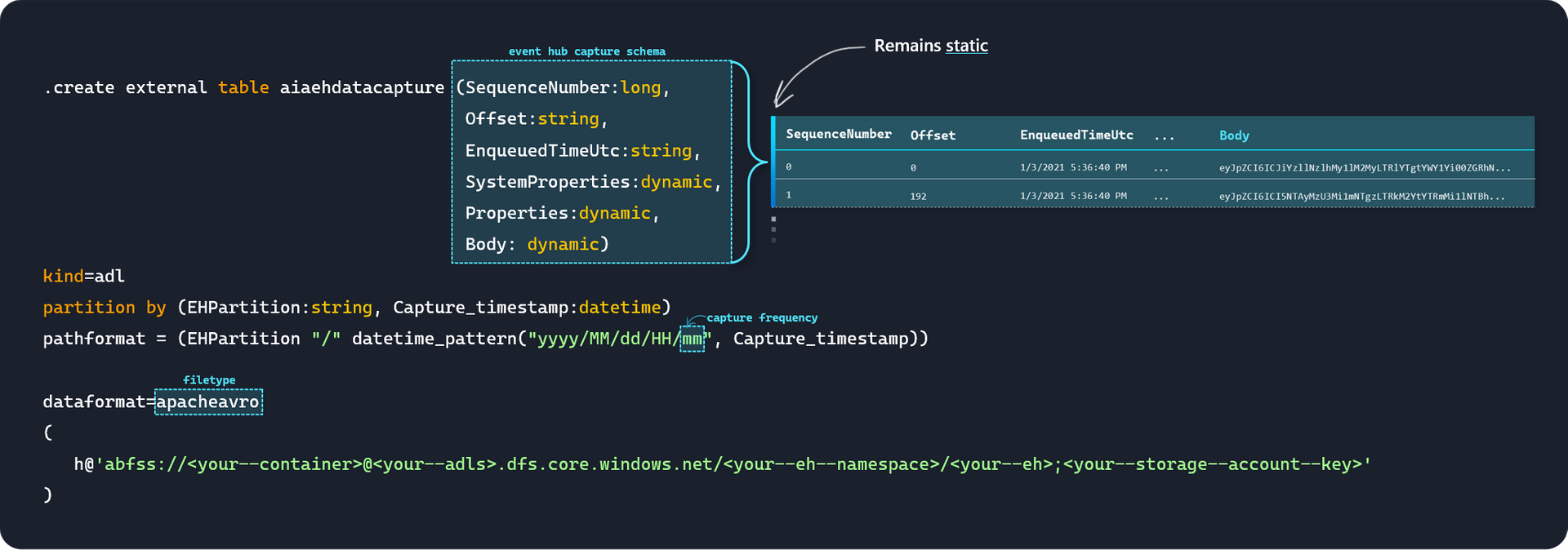

We begin with an existing ADX Database (setup instructions here), and create an external table on top our Data Lake using the command below:

// Create External Table

.create external table your_external_table_name (SequenceNumber:long,

Offset:string,

EnqueuedTimeUtc:string,

SystemProperties:dynamic,

Properties:dynamic,

Body: dynamic)

kind=adl

partition by (EHPartition:string, Capture_timestamp:datetime)

pathformat = (EHPartition "/" datetime_pattern("yyyy/MM/dd/HH/mm", Capture_timestamp))

dataformat=apacheavro

(

h@'abfss://<your--container>@<your--storage--account>.dfs.core.windows.net/<your--event--hub--namespace>/<your--event--hub>;<your--storage--account--key>'

)Note a couple takeaways from here:

We specify the

avroschema (and not theJSONpayload schema that our application dictates) when creating the External Table.The

avroschema isn't likely to change as it's the same for all Event Hubs - meaning we only need to do this once.We specify the following "Virtual columns" via

pathformat, which also defines the Capture Frequency down to minutes granularity (i.e. in alignment with how our Capture is configured).EHPartition: Event Hub PartitionCapture_timestamp: When capture was performed on ADLS

Note: The naming convention here is arbitrary.

We specify the external file format as

avroviadataformat=apacheavro

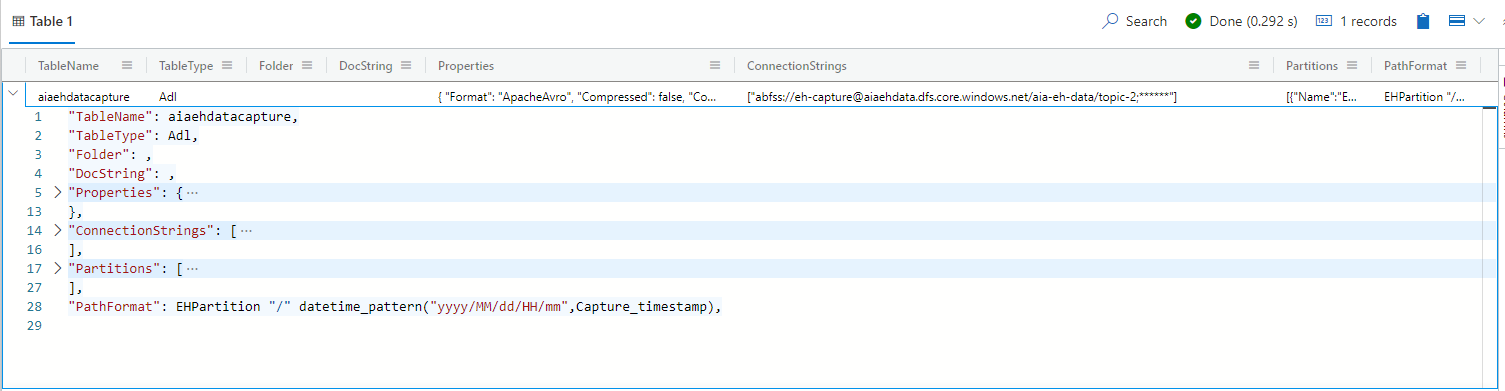

And we see the following confirmation:

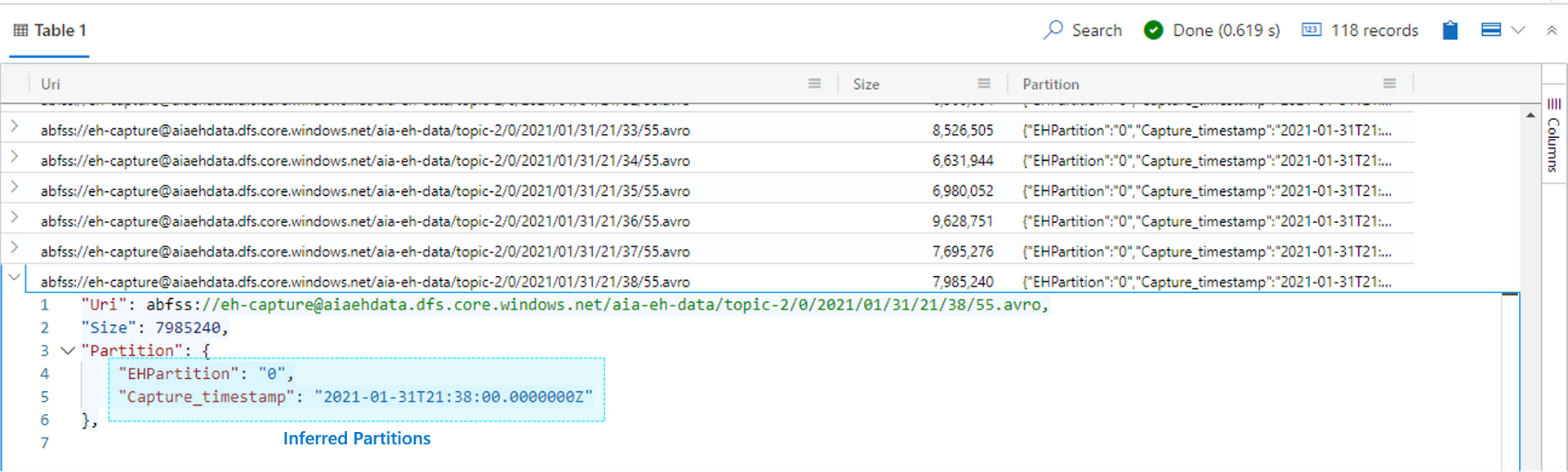

We can list the "External table artifacts" (i.e. the avro files that make up our External Table) and inferred Partitions via the following command:

.show external table your_external_table_name artifactsAnd finally, we can query the contents of our Event Hub Capture JSON payloads without having to specify the schema, thanks to the bag_unpack() Kusto command:

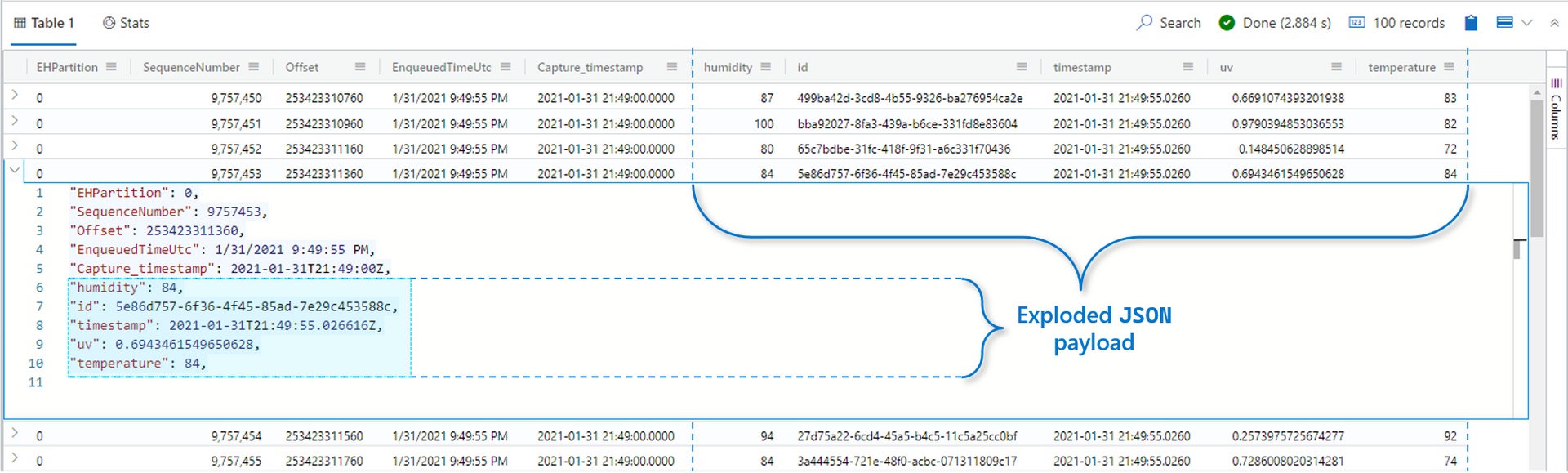

// Query External Table

external_table("your_external_table_name")

| where EHPartition == 0 // Filter on Event Hub Partition

| where Capture_timestamp between (ago(10m) .. now()) // Filter on data captured for the last 10 minutes

| limit 100

| project EHPartition, SequenceNumber, Offset, EnqueuedTimeUtc, Capture_timestamp, Body // "SELECT" statement

| evaluate bag_unpack(Body) // Explode JSON payloadAnd we have our original payloads back, along with the EventData properties in a queryable format.